Carrying on from last night: I couldn’t get the separating hyperplane (aka decision line) to draw with this code:

Apparently, the sample code in git for chapter 2 does work. So what went wrong??!

    heights.weights <- transform(heights.weights,
                                 Male = ifelse(Gender == 'Male', 1, 0))

    logit.model <- glm(Male ~ Weight + Height,
                       data = heights.weights,
                       family = binomial(link = 'logit'))

    ggplot(heights.weights, aes(x = Height, y = Weight)) +
      geom_point(aes(color = Gender, alpha = 0.25)) +
      scale_alpha(guide = "none") +
      scale_color_manual(values = c("Male" = "black", "Female" = "gray")) +
      theme_bw() +
      stat_abline(intercept = -coef(logit.model)[1] / coef(logit.model)[2],
                  slope = -coef(logit.model)[3] / coef(logit.model)[2],
                  geom = 'abline',
                  color = 'black')

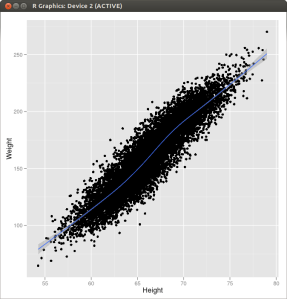

It seems I had written the logit.model formula with its terms in the wrong order. Correcting the order to Male ~ Weight + Height did the trick.

    heights.weights <- transform(heights.weights,
                                 Male = ifelse(Gender == 'Male', 1, 0))

    logit.model <- glm(Male ~ Weight + Height,
                       data = heights.weights,
                       family = binomial(link = 'logit'))

    ggplot(heights.weights, aes(x = Height, y = Weight, color = Gender)) +
      geom_point() +
      stat_abline(intercept = -coef(logit.model)[1] / coef(logit.model)[2],
                  slope = -coef(logit.model)[3] / coef(logit.model)[2],
                  geom = 'abline', color = 'black')
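
A note on why the order matters, as far as I can tell: coef() returns the coefficients in the order the terms appear in the formula, so [2] and [3] swap meaning when Weight and Height are swapped. Below is a minimal sketch of a more order-proof way to get the line, looking the coefficients up by name instead of by position. The `toy` data frame is simulated with made-up numbers and only stands in for heights.weights, and `boundary` is a hypothetical helper name, not something from the book.

```r
# Simulated stand-in for heights.weights (made-up numbers, not the book's data)
set.seed(1)
n <- 200
toy <- data.frame(
  Gender = rep(c("Male", "Female"), each = n),
  Height = c(rnorm(n, 69, 3), rnorm(n, 64, 3)),
  Weight = c(rnorm(n, 187, 20), rnorm(n, 136, 20))
)
toy$Male <- ifelse(toy$Gender == "Male", 1, 0)

# Same model, two term orders
fit.a <- glm(Male ~ Weight + Height, data = toy, family = binomial(link = "logit"))
fit.b <- glm(Male ~ Height + Weight, data = toy, family = binomial(link = "logit"))

names(coef(fit.a))  # "(Intercept)" "Weight" "Height"
names(coef(fit.b))  # "(Intercept)" "Height" "Weight", so [2] is now Height

# Decision boundary: b0 + bW * Weight + bH * Height = 0.
# Solved for Weight (the y axis): Weight = -b0 / bW - (bH / bW) * Height
boundary <- function(fit) {
  b <- coef(fit)
  c(intercept = unname(-b["(Intercept)"] / b["Weight"]),
    slope     = unname(-b["Height"] / b["Weight"]))
}

boundary(fit.a)
boundary(fit.b)  # same line, regardless of the order in the formula
```

The two numbers can then be handed to the plot. In newer ggplot2 versions stat_abline appears to be gone, so `geom_abline(intercept = line[["intercept"]], slope = line[["slope"]], color = "black")` with `line <- boundary(fit.b)` would probably be the thing to add instead.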

Dissecting the transform command a little, how DOES it work?

    > heights.weights[1:10,]
       Gender   Height   Weight
    1    Male 73.84702 241.8936
    2    Male 68.78190 162.3105
    3    Male 74.11011 212.7409
    4    Male 71.73098 220.0425
    5    Male 69.88180 206.3498
    6    Male 67.25302 152.2122
    7    Male 68.78508 183.9279
    8    Male 68.34852 167.9711
    9    Male 67.01895 175.9294
    10   Male 63.45649 156.3997
    > heights.weights <- transform(heights.weights,
    +   Male = ifelse(Gender == 'Male', 1, 0))
    > heights.weights[1:10,]
       Gender   Height   Weight Male
    1    Male 73.84702 241.8936    1
    2    Male 68.78190 162.3105    1
    3    Male 74.11011 212.7409    1
    4    Male 71.73098 220.0425    1
    5    Male 69.88180 206.3498    1
    6    Male 67.25302 152.2122    1
    7    Male 68.78508 183.9279    1
    8    Male 68.34852 167.9711    1
    9    Male 67.01895 175.9294    1
    10   Male 63.45649 156.3997    1

OK, so it just creates a new column (a “tag”), Male, which is populated according to the contents of the first column, Gender (a factor, i.e. categories): 1 where Gender is 'Male', 0 otherwise.
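
To make that concrete, here is a small sketch comparing transform with plain column assignment. The three-row data frame `df` is made up for illustration and is not the book's data.

```r
# Tiny made-up data frame standing in for heights.weights
df <- data.frame(Gender = factor(c("Male", "Female", "Male")),
                 Height = c(73.8, 58.9, 68.8))

# transform() evaluates the expression with the columns visible as variables
# and returns a copy of the data frame with the new column attached
a <- transform(df, Male = ifelse(Gender == "Male", 1, 0))

# The same tagging done with plain column assignment
b <- df
b$Male <- ifelse(b$Gender == "Male", 1, 0)

a$Male  # 1 0 1
b$Male  # 1 0 1
```

Note that transform does not change `df` itself, which is why the original code assigns the result back to heights.weights.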

With this, the glm (generalized linear models) command makes more sense, though I still have little to no idea what the parameters mean even after reading the help pages…
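
For what it's worth, here is a rough sketch of how the three arguments can be read, with the fitted probability checked by hand. Again, `toy` is simulated with made-up numbers and the new person (160 lb, 67 in) is an arbitrary example, so treat this as an illustration under those assumptions rather than a full account of glm.

```r
# Simulated stand-in for heights.weights (made-up numbers)
set.seed(42)
n <- 200
toy <- data.frame(
  Male   = rep(c(1, 0), each = n),
  Height = c(rnorm(n, 69, 3), rnorm(n, 64, 3)),
  Weight = c(rnorm(n, 187, 20), rnorm(n, 136, 20))
)

# formula: response on the left of ~, predictors on the right
# data:    the data frame in which the formula's column names are looked up
# family:  binomial with a logit link, i.e. a 0/1 response modelled
#          through the logistic function (logistic regression)
fit <- glm(Male ~ Weight + Height, data = toy, family = binomial(link = "logit"))

new <- data.frame(Weight = 160, Height = 67)

# Probability of Male for the new row according to the fitted model
p.glm <- predict(fit, newdata = new, type = "response")

# The same number by hand: linear predictor pushed through the inverse logit
b <- coef(fit)
eta <- b["(Intercept)"] + b["Weight"] * new$Weight + b["Height"] * new$Height
p.hand <- plogis(eta)  # 1 / (1 + exp(-eta))

all.equal(unname(p.glm), unname(p.hand))
```

The decision line in the plots is simply the set of points where this probability is 0.5, which is where the linear predictor `eta` equals 0.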

Anyway, onwards to chapter 3, binary classification! We’ll see later if there’s a need to learn glm in depth by myself.